Google Seeks to Increase Transparency in AI Art with Invisible Digital Watermark

Google DeepMind has introduced SynthID, a watermarking and identification tool designed to enhance transparency in AI-generated images. It embeds a digital watermark directly into an image’s pixels, where it remains invisible to the human eye. Initially, SynthID will be made available to a select group of customers using Imagen, Google’s cloud-based AI art generator.

One of the many problems with generative art – in addition to the ethical implications of training on artists’ work – is the potential for deepfakes. For example, the Pope’s hot new hip-hop outfit (an AI image created with Midjourney) that went viral on social media was an early example of what could become commonplace as generative tools evolve. It doesn’t take much imagination to see how political ads using AI-generated art could do a lot more damage than a funny picture circulating on Twitter. “Watermarking audio and visual content to make it clear that the content is AI-generated” was one of the voluntary commitments seven AI companies agreed to after a meeting at the White House in July. Google is the first of those companies to launch such a system.

Google isn’t going too far into the weeds of SynthID’s technical implementation (likely to prevent workarounds), but it does say that the watermark can’t be easily removed with simple editing techniques. “Finding the right balance between stealth and robustness to image manipulations is difficult,” the company wrote in a DeepMind blog post published today. “We designed SynthID so that it does not degrade the image quality and allows the watermark to remain detectable even after modifications such as adding filters, changing colors and saving with different lossy compression methods – most commonly used for JPEG files,” DeepMind’s SynthID project leaders Sven Gowal and Pushmeet Kohli wrote.

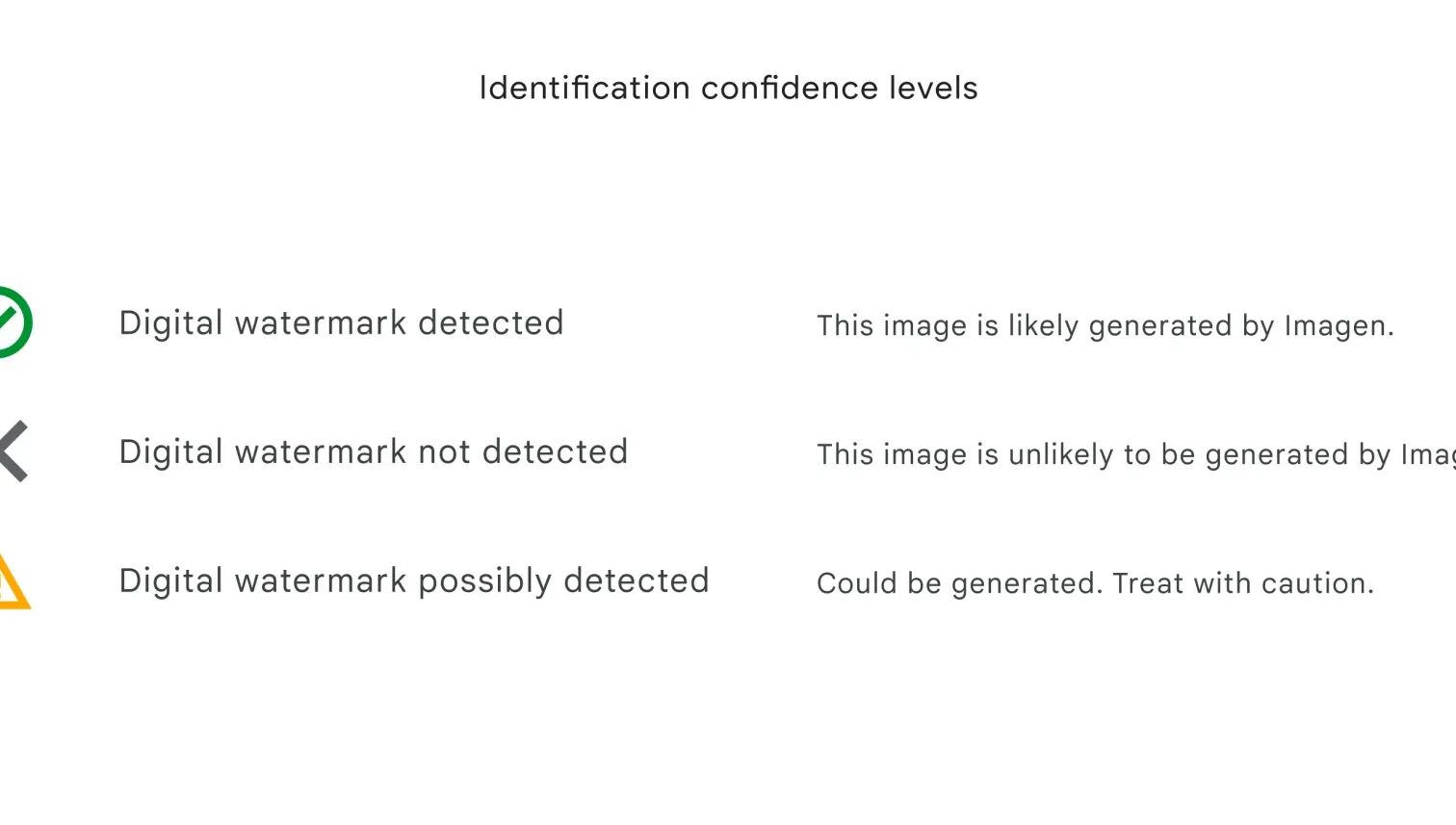

The detection part of SynthID classifies an image according to three digital watermark confidence levels: detected, not detected, and possibly detected. Because the watermark is embedded in the pixels of an image, Google says its system can work alongside metadata-based approaches, such as the one Adobe uses for Photoshop’s generative features, which are currently available in open beta.
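To make the three-level result concrete, here is a minimal sketch of how a detector's numeric score might be mapped to the three labels. Everything in it is an assumption for illustration: Google has not published how SynthID scores images, and the names `WatermarkVerdict` and `classify_score`, along with both threshold values, are hypothetical.

```python
from enum import Enum


class WatermarkVerdict(Enum):
    DETECTED = "detected"
    POSSIBLY_DETECTED = "possibly detected"
    NOT_DETECTED = "not detected"


# Hypothetical cut-offs chosen for illustration only; Google has not
# published the thresholds SynthID uses.
LOW_THRESHOLD = 0.3
HIGH_THRESHOLD = 0.9


def classify_score(score: float,
                   low: float = LOW_THRESHOLD,
                   high: float = HIGH_THRESHOLD) -> WatermarkVerdict:
    """Map a detector score in [0, 1] to one of three confidence levels."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= high:
        return WatermarkVerdict.DETECTED
    if score >= low:
        return WatermarkVerdict.POSSIBLY_DETECTED
    return WatermarkVerdict.NOT_DETECTED


if __name__ == "__main__":
    for s in (0.95, 0.5, 0.1):
        print(s, classify_score(s).value)
```

A middle band like this lets a detector avoid a hard yes-or-no call on images that have been edited heavily enough to weaken, but not erase, the signal.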

SynthID includes a pair of deep learning models: one for watermarking and one for identification. Google says they were trained together on a diverse set of images, which resulted in a combined ML model. “The combined model is optimized for several goals, including correctly identifying watermarked content and improving stealth by visually aligning the watermark with the original content,” Gowal and Kohli wrote.
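One common way to optimize a model for several goals at once is to add the individual penalties into a single weighted loss. The sketch below illustrates that general idea only; it is not SynthID's actual training objective, which Google has not disclosed. The function names, the choice of cross-entropy and mean squared error, and the `visibility_weight` parameter are all assumptions.

```python
import math


def detection_loss(predicted: float, is_watermarked: bool) -> float:
    """Binary cross-entropy for one image: low when the detector is right."""
    eps = 1e-12
    p = min(max(predicted, eps), 1.0 - eps)  # keep log() finite
    return -math.log(p) if is_watermarked else -math.log(1.0 - p)


def visibility_loss(original: list[float], watermarked: list[float]) -> float:
    """Mean squared pixel difference: low when the watermark is hard to see."""
    if not original or len(original) != len(watermarked):
        raise ValueError("images must be non-empty and the same size")
    return sum((a - b) ** 2 for a, b in zip(original, watermarked)) / len(original)


def combined_loss(predicted: float,
                  is_watermarked: bool,
                  original: list[float],
                  watermarked: list[float],
                  visibility_weight: float = 1.0) -> float:
    """Hypothetical joint objective: detection accuracy plus stealth."""
    return (detection_loss(predicted, is_watermarked)
            + visibility_weight * visibility_loss(original, watermarked))


if __name__ == "__main__":
    original = [0.20, 0.40, 0.60]      # toy "image" as a flat list of pixels
    watermarked = [0.21, 0.39, 0.61]   # tiny perturbation carrying the mark
    print(combined_loss(0.8, True, original, watermarked))
```

Raising `visibility_weight` in a setup like this would favor a less visible watermark at the cost of detection accuracy, which is the trade-off between stealth and robustness that the DeepMind post describes.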

Google admitted it’s not a perfect solution, adding that it’s “not foolproof against extreme image manipulation.” But it describes watermarking as “a promising technical approach that enables people and organizations to work responsibly with AI-generated content.” The company says the tool could expand to other AI models, including those for generating text (like ChatGPT), video and audio.

While watermarks can help with deepfakes, it’s easy to imagine digital watermarking becoming an arms race with hackers, with services that use SynthID requiring constant updates. Additionally, the open-source nature of Stable Diffusion, one of the leading generation tools, could make industry-wide adoption of SynthID or any similar solution a major challenge: it already has countless custom builds out in the wild that can be run on local computers. Regardless, Google hopes to make SynthID available to third parties in the “near future,” to at least improve AI transparency across the industry.